Azure AI Search: From Exploration to Production

8/12/2025 · 1 min read

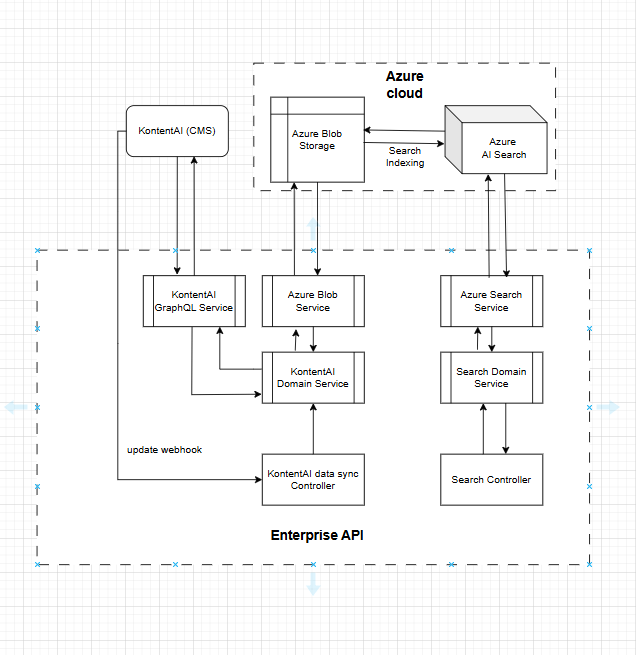

Before diving into code, I like to understand the system I'm working with: its architecture, use cases, and the different ways to build with it. For a recent internal project, we were exploring AI agent capabilities, and the choices were vast: different LLMs, frameworks, and stacks. But since my company is deeply integrated with Microsoft technologies, building on Azure with Azure AI Search and our existing tech stack (C#/TypeScript with React) was a natural fit. Here's a high-level system diagram.

Getting Started

Since we're using GitHub Copilot (Claude), we'll be moving fast. The goal is to build a sitewide search for our public-facing site. Ultimately we want to turn it into a Retrieval-Augmented Generation (RAG) system with chunked embeddings and vector search, but not yet, as we have a fast-approaching due date. To get where we want to be, there are a few things AI Search relies on that we need to set up first.

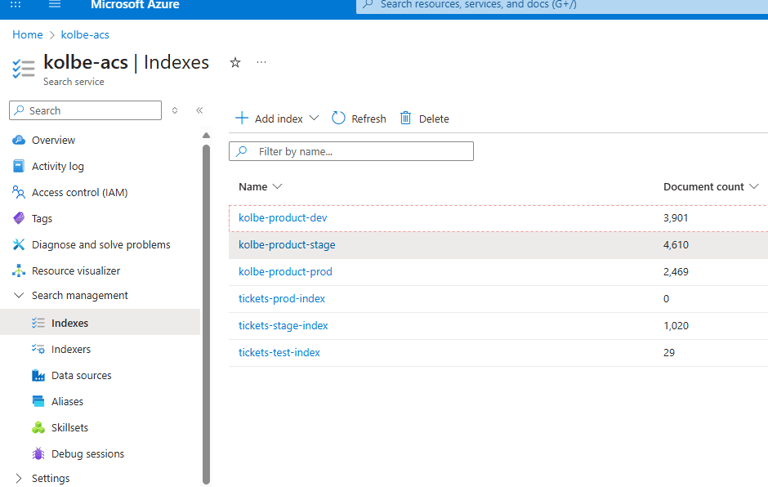

Indexes store the vectorized or structured content that the LLM queries.

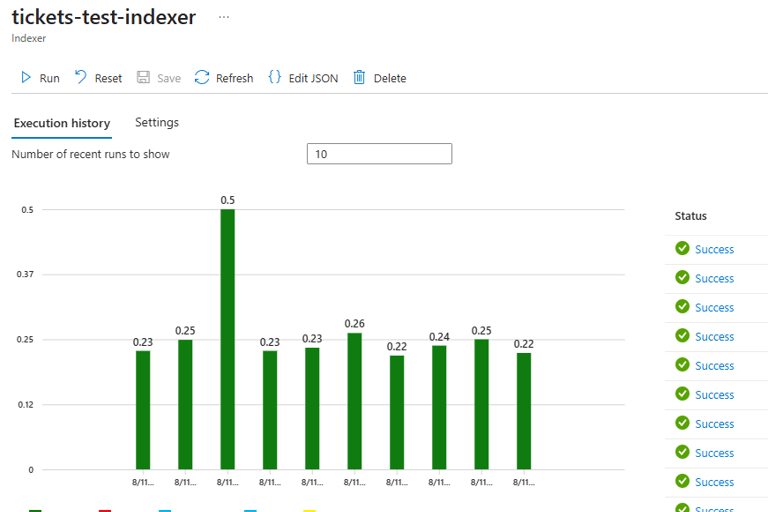

Indexers help keep that content fresh and structured by syncing from your data sources.

Data sources hold the data that gets indexed for search.
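To make the relationship between these three pieces concrete, here's a minimal sketch in TypeScript. The property names mirror the JSON bodies the Azure AI Search REST API accepts for data sources, indexes, and indexers, but everything else is an assumption for illustration: the resource names, the field list, the blob container, and the `validatePipeline` helper are all hypothetical and not our actual setup.

```typescript
// Sketch only: resource names, fields, and the helper below are hypothetical.
// Property names follow the Azure AI Search REST payload shapes.

interface SearchField {
  name: string;
  type: "Edm.String" | "Edm.DateTimeOffset" | "Collection(Edm.String)";
  key?: boolean;        // exactly one field per index acts as the document key
  searchable?: boolean; // included in full-text search
  filterable?: boolean;
  sortable?: boolean;
}

interface IndexDefinition {
  name: string;
  fields: SearchField[];
}

interface DataSourceDefinition {
  name: string;
  type: "azureblob" | "azuresql" | "cosmosdb";
  credentials: { connectionString: string };
  container: { name: string };
}

interface IndexerDefinition {
  name: string;
  dataSourceName: string;          // which data source to pull from
  targetIndexName: string;         // which index to write into
  schedule?: { interval: string }; // ISO 8601 duration, e.g. "PT2H"
}

// Data source: where the raw content lives (assumed here to be a blob container).
const dataSource: DataSourceDefinition = {
  name: "site-content-ds",
  type: "azureblob",
  credentials: { connectionString: "<connection-string-from-config>" },
  container: { name: "site-content" },
};

// Index: the searchable shape of that content.
const index: IndexDefinition = {
  name: "site-pages-index",
  fields: [
    { name: "id", type: "Edm.String", key: true, filterable: true },
    { name: "title", type: "Edm.String", searchable: true, sortable: true },
    { name: "content", type: "Edm.String", searchable: true },
    { name: "url", type: "Edm.String" },
    { name: "lastModified", type: "Edm.DateTimeOffset", filterable: true, sortable: true },
  ],
};

// Indexer: the sync job that ties the data source to the index.
const indexer: IndexerDefinition = {
  name: "site-content-indexer",
  dataSourceName: dataSource.name,
  targetIndexName: index.name,
  schedule: { interval: "PT2H" },
};

// Hypothetical sanity check to run before sending anything to the service.
function validatePipeline(
  ds: DataSourceDefinition,
  idx: IndexDefinition,
  ixr: IndexerDefinition
): string[] {
  const problems: string[] = [];
  const keyCount = idx.fields.filter((f) => f.key === true).length;
  if (keyCount !== 1) {
    problems.push(`index needs exactly one key field, found ${keyCount}`);
  }
  if (ixr.dataSourceName !== ds.name) {
    problems.push("indexer points at a different data source");
  }
  if (ixr.targetIndexName !== idx.name) {
    problems.push("indexer targets a different index");
  }
  return problems;
}
```

The point of the sketch is the wiring: the indexer is the only piece that knows about both of the others, so a typo in either name there is the easiest way to end up with an empty index.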

Azure AI Search clients can use different indexes for specialized queries, which opens up powerful possibilities for building intelligent, context-aware agents.
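As a rough illustration of routing queries to different indexes, here's a small TypeScript sketch that picks an index by query kind and builds the corresponding REST search request. The index names, the `QueryKind` categories, the service name, and the `buildSearchRequest` helper are hypothetical; the URL pattern and the `search`/`top` body properties follow the Search Documents REST operation, and the `api-version` value is an assumption you should check against the version your service supports.

```typescript
// Sketch only: index names, query kinds, and the helper are hypothetical.

type QueryKind = "pages" | "docs";

// One index per kind of content; an agent can pick the one that fits the question.
const indexByKind: Record<QueryKind, string> = {
  pages: "site-pages-index",
  docs: "site-docs-index",
};

// Assumed API version; verify against your own service.
const API_VERSION = "2024-07-01";

interface SearchRequest {
  url: string;
  body: { search: string; top: number };
}

// Builds the URL and JSON body for a POST to the chosen index's search endpoint.
function buildSearchRequest(
  serviceName: string,
  kind: QueryKind,
  searchText: string,
  top: number = 10
): SearchRequest {
  const indexName = indexByKind[kind];
  const url =
    `https://${serviceName}.search.windows.net/indexes/` +
    `${encodeURIComponent(indexName)}/docs/search?api-version=${API_VERSION}`;
  return { url, body: { search: searchText, top } };
}
```

Actually sending the request also needs authentication (an `api-key` header or a Microsoft Entra token), which I've left out here to keep the routing idea front and center.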